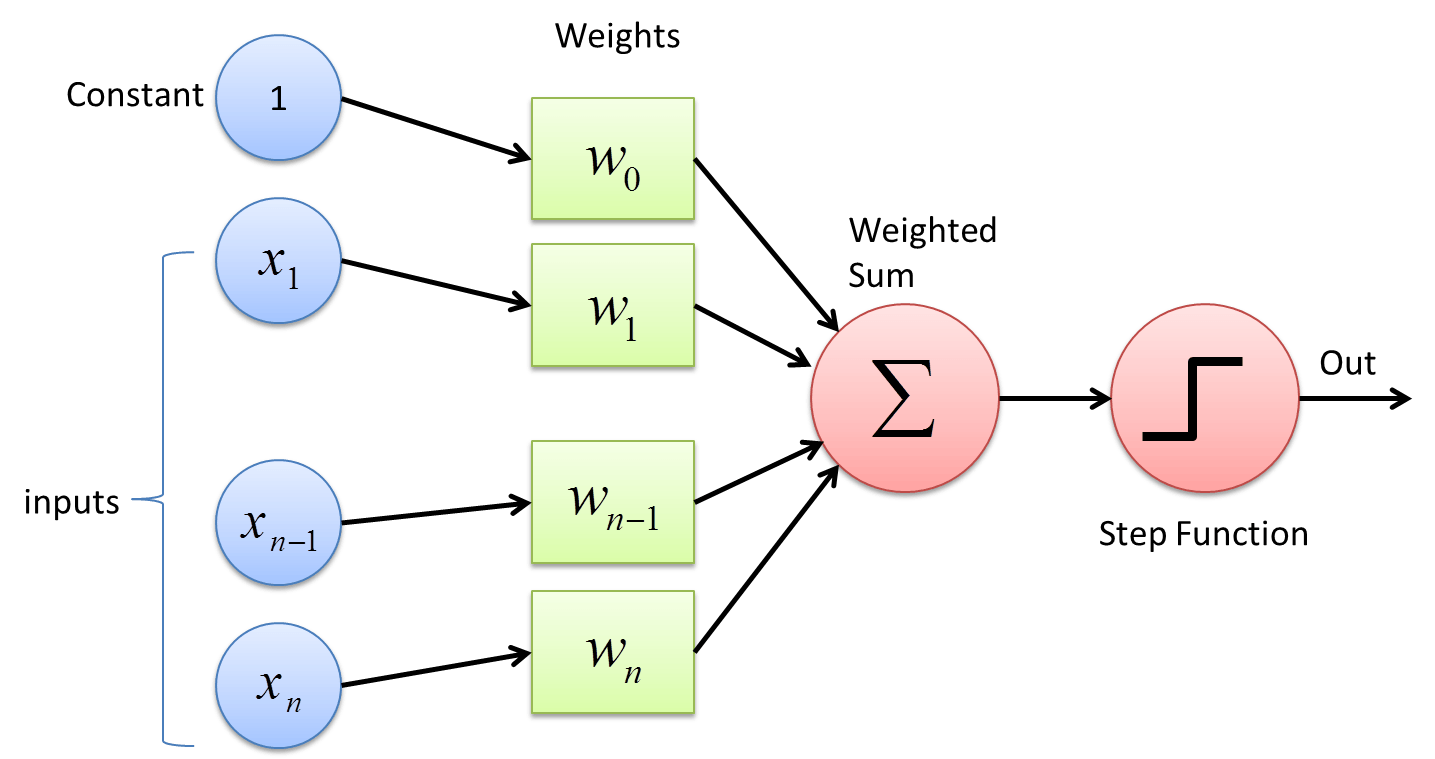

The perceptron is simply a fancy name for the simple neuron model with the step activation function that we discussed before. It was among the very first formal models of neural computation, and because of its fundamental role in the history of neural networks, it wouldn’t be unfair to call it the “mother of all artificial neural networks”.

It can be used as a simple classifier in binary classification tasks. A method for learning the weights of the perceptron from data, called the Perceptron algorithm, was introduced by the psychologist Frank Rosenblatt in 1957. We will not study the Perceptron algorithm in detail. Suffice it to say that it is just about as simple as the nearest neighbor classifier. The basic principle is to feed the network training data one example at a time. Each misclassification leads to an update of the weights.
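To make the principle concrete, here is a minimal sketch of this kind of learning loop in Python. It is an illustration under our own assumptions rather than Rosenblatt's original formulation: the function names `predict` and `train_perceptron`, the learning rate, the bias term, the number of passes over the data, and the toy data set (the logical AND of two inputs) are all chosen just for this example.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Learn weights from (inputs, label) pairs, where each label is 0 or 1.

    The defaults for epochs and learning_rate are illustrative assumptions.
    """
    n_inputs = len(examples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        # Feed the training data one example at a time.
        for x, label in examples:
            error = label - predict(weights, bias, x)
            if error != 0:
                # A misclassification nudges the weights toward the correct side.
                weights = [w + learning_rate * error * xi
                           for w, xi in zip(weights, x)]
                bias += learning_rate * error
                mistakes += 1
        if mistakes == 0:
            break  # every training example is classified correctly
    return weights, bias


# Hypothetical toy data: the logical AND of two binary inputs.
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_data)
predictions = [predict(weights, bias, x) for x, _ in and_data]
print(weights, bias, predictions)
```

Correctly classified examples leave the weights untouched, so on data that a straight line can separate, such as this toy set, the loop stops as soon as a full pass produces no mistakes.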

The history of the debate that eventually led to the almost complete abandonment of the neural network approach in the 1960s, for more than two decades, is extremely fascinating. The article “A Sociological Study of the Official History of the Perceptrons Controversy” by Mikel Olazaran (published in Social Studies of Science, 1996) reviews the events from a sociology of science point of view. Reading it today is quite thought-provoking. The stories about celebrated AI heroes who had developed neural network algorithms that would soon reach the level of human intelligence and become self-conscious can be compared to some statements made during the current hype. If you take a look at the above article, even if you don't read all of it, it will provide interesting background to today's news. Consider, for example, an article in the MIT Technology Review published in September 2017, where Jordan Jacobs, co-founder of the multimillion-dollar Vector Institute for AI, compares Geoffrey Hinton (a figurehead of the current deep learning boom) to Einstein because of his contributions to the development of neural network algorithms in the 1980s and later. Also recall the Human Brain project mentioned in the previous section.

According to Hinton, “the fact that it doesn’t work is just a temporary annoyance” (although, according to the article, Hinton is laughing about the above statement, so it's hard to tell how serious he is about it). The Human Brain project claims to be “close to a profound leap in our understanding of consciousness”. Doesn't that sound familiar?

No one really knows the future with certainty, but given the track record of earlier announcements of imminent breakthroughs, some critical thinking is advised.

AI hyperbole

After its discovery, the Perceptron algorithm received a lot of attention, not least because of optimistic statements made by its inventor, Frank Rosenblatt. A classic example of AI hyperbole is a New York Times article published on July 8th, 1958:

“The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, reproduce itself and be conscious of its existence.”

Please note that neural network enthusiasts are not at all the only ones inclined towards optimism. The rise and fall of the logic-based expert systems approach to AI had all the same hallmark features of AI hype, with people claiming that the final breakthrough was just a short while away. The outcome, both in the early 1960s and late 1980s, was a collapse in research funding called an AI Winter.

With the incorporation of Geometric Algebra, could the Perceptron not be revived as a tool for neural computation? See the work of Sven Buchholz.
