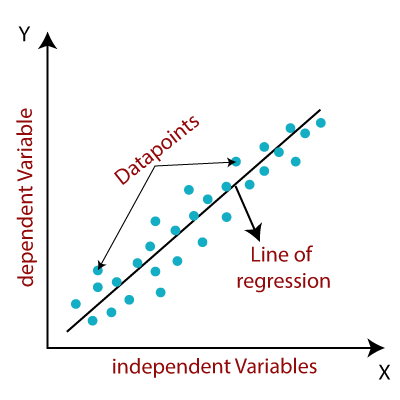

Linear regression is truly the workhorse of many AI and data science applications. It has its limits, but they are often outweighed by its simplicity, interpretability, and efficiency. To give a few examples, linear regression has been used successfully in the following problems:

- prediction of click rates in online advertising

- prediction of retail demand for products

- prediction of box-office revenue of Hollywood movies

- prediction of software cost

- prediction of insurance cost

- prediction of crime rates

- prediction of real estate prices

Could we use regression to predict labels?

Linear regression and the nearest neighbor method produce different kinds of predictions. Linear regression produces numerical outputs, while the nearest neighbor method produces labels from a fixed set of alternatives (“classes”).

Where linear regression excels compared to nearest neighbors is interpretability. What do we mean by this? You could say that, in a way, the nearest neighbor method and any single prediction that it produces are easy to interpret: it’s just the nearest training data element! This is true, but when it comes to the interpretability of the learned model, there is a clear difference. The trained nearest neighbor model cannot be interpreted in the way that the weights in linear regression can: the learned model is basically the whole data set, and it is usually way too big and complex to provide us with much insight. So what if we’d like to have a method that produces the same kind of outputs as the nearest neighbor method, namely labels, but is interpretable like linear regression?

Logistic regression to the rescue

Well, there is good news for you: we can turn the linear regression method’s outputs into predictions about labels. The technique for doing this is called logistic regression. We will not go into the technicalities; suffice it to say that in the simplest case, we take the output from linear regression, which is a number, and predict one label A if the output is greater than zero, and another label B if the output is less than or equal to zero. In fact, instead of just predicting one class or another, logistic regression can also give us a measure of the uncertainty of the prediction. So if we are predicting whether a customer will buy a new smartphone this year, we can get a prediction that customer A will buy a phone with probability 90%, but for another, less predictable customer, we can get a prediction that they will not buy a phone with 55% probability (or in other words, that they will buy one with 45% probability).
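To make the idea above concrete, here is a minimal sketch in Python. It assumes the standard logistic (sigmoid) function is what turns the linear output into a probability; the function names, weights, and inputs are made up for illustration and are not from any particular library.

```python
import math

def sigmoid(z):
    """Squash any real number into a value strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(features, weights, intercept):
    """Return (label, probability of label "A") for one data point."""
    # The linear regression part: a weighted sum of the inputs plus an intercept.
    z = intercept + sum(w * x for w, x in zip(weights, features))
    # The logistic part: turn the number into a probability for label "A".
    probability_a = sigmoid(z)
    # Simplest decision rule from the text: "A" if the output is above zero.
    label = "A" if z > 0 else "B"
    return label, probability_a

# Hypothetical weights and inputs, chosen only to show the call.
label, probability_a = predict([3.0, 1.0], [0.8, -0.5], -1.0)
print(label, round(probability_a, 2))
```

Note that an output of exactly zero corresponds to a probability of exactly 50%, which is why thresholding the linear output at zero and thresholding the probability at one half give the same labels.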

It is also possible to use the same trick to obtain predictions over more than two possible labels, so instead of always predicting either yes or no (buy a new phone or not, fake news or real news, and so forth), we can use logistic regression to identify, for example, handwritten digits, in which case there are ten possible labels.
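One common way to extend this to more than two labels is to compute one score per label and normalize the scores into probabilities with the softmax function. The text does not say which variant it has in mind, so treat the following as a sketch under that assumption; the ten scores are invented for illustration.

```python
import math

def softmax(scores):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical linear scores for the digits 0..9, one score per label.
scores = [0.2, -1.0, 0.5, 3.1, 0.0, 1.2, -0.3, 0.4, 2.0, -2.2]
probabilities = softmax(scores)

# The predicted digit is the label with the highest probability.
predicted_digit = max(range(len(probabilities)), key=probabilities.__getitem__)
print(predicted_digit)
```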

An example of logistic regression

Let’s suppose that we collect data on students taking an introductory course in cookery. In addition to basic information such as the student ID, name, and so on, we also ask the students to report how many hours they studied for the exam (however you study for a cookery exam, probably cooking?), and hope that they are more or less honest in their reports. After the exam, we will know whether each student passed the course or not. Some data points are presented below:

| Student ID | Hours studied | Pass/fail |
|---|---|---|
| 24 | 15 | Pass |
| 41 | 9.5 | Pass |
| 58 | 2 | Fail |
| 101 | 5 | Fail |
| 103 | 6.5 | Fail |
| 215 | 6 | Pass |

Based on the table, what kind of conclusion could you draw about the relationship between the hours studied and passing the exam? If we had data from hundreds of students, maybe we could see how much study is needed in order to pass the course. We can present this data in a chart as you can see below:

Each dot in the figure corresponds to one student. Along the bottom of the figure we have the scale for how many hours the student studied for the exam. The students who passed the exam are shown as dots at the top of the chart, and the ones who failed are shown at the bottom. We’ll use the scale on the left to indicate the predicted probability of passing, which we’ll get from the logistic regression model as we explain just below. Based on this figure, you can see roughly that students who spent longer studying had better chances of passing the course. The extreme cases are especially intuitive: with less than an hour’s work, it is very hard to pass the course, but with a lot of work, most will be successful. But what about those who spend an amount of time studying that is somewhere in between the extremes? If you study for 6 hours, what are your chances of passing?

We can quantify the probability of passing using logistic regression. The curve in the figure can be interpreted as the probability of passing: for example, after studying for five hours, the probability of passing is a little over 20%. We will not go into the details of how the curve is obtained, but the process is similar to how we learn the weights in linear regression.
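For the curious, here is a rough sketch of how such a curve could be learned. It fits an intercept and a slope to the six rows of the table by plain gradient descent on the log-loss, which is one standard approach and not necessarily the one used to draw the figure. The learning rate and step count are arbitrary choices, and with only six students the resulting curve will not match the figure, which is based on more data.

```python
import math

# (hours studied, passed?) copied from the table; 1 = pass, 0 = fail.
data = [(15, 1), (9.5, 1), (2, 0), (5, 0), (6.5, 0), (6, 1)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit(data, learning_rate=0.05, steps=100_000):
    """Learn an intercept and slope by gradient descent on the average log-loss."""
    intercept, slope = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        grad_intercept = 0.0
        grad_slope = 0.0
        for hours, passed in data:
            # Difference between the predicted probability and the outcome.
            error = sigmoid(intercept + slope * hours) - passed
            grad_intercept += error
            grad_slope += error * hours
        # Take a small step against the average gradient.
        intercept -= learning_rate * grad_intercept / n
        slope -= learning_rate * grad_slope / n
    return intercept, slope

intercept, slope = fit(data)

def pass_probability(hours):
    """Predicted probability of passing after studying for the given hours."""
    return sigmoid(intercept + slope * hours)

for hours in (2, 6, 10, 15):
    print(hours, round(pass_probability(hours), 2))
```

The learned slope plays the same role as a weight in linear regression: a positive slope means that each extra hour of study raises the predicted chance of passing.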

If you wanted to have an 80% chance of passing a university exam, based on the above figure, approximately how many hours should you study for?

Your answer should be 10-11 hours.
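If the curve is a logistic function of the hours studied, the same question can also be answered by inverting it rather than reading it off the chart. The sketch below assumes that form; the intercept and slope are hypothetical values picked only so that the curve roughly agrees with the two readings quoted in the text (a little over 20% at five hours, 80% at 10-11 hours), not the actual parameters behind the figure.

```python
import math

def hours_for_probability(p, intercept, slope):
    """Invert p = 1 / (1 + exp(-(intercept + slope * hours))) for hours."""
    log_odds = math.log(p / (1.0 - p))
    return (log_odds - intercept) / slope

# Hypothetical parameters, chosen to roughly match the readings in the text.
intercept, slope = -3.8, 0.5

hours_needed = hours_for_probability(0.80, intercept, slope)
print(round(hours_needed, 1))
```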

Logistic regression is also used in a great variety of real-world AI applications, such as predicting financial risks, medical studies, and so on. However, like linear regression, it is constrained by the linearity property, so we need many other methods in our toolbox.
