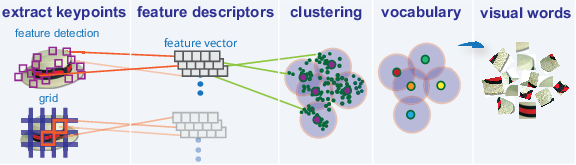

Bag of Word (BoW) Model is used to extract the features from text so that the text can be used in modeling in machine learning algorithms.

Now the question arises that why we need to extract the features from text. It is because the machine learning algorithms cannot work with raw data and they need numeric data so that they can extract meaningful information out of it. The conversion of text data into numeric data is called feature extraction or feature encoding.

This is very simple approach for extracting the features from text. Suppose we have a text document and we want to convert it into numeric data or say want to extract the features out of it then first of all this model extracts a vocabulary from all the words in the document. Then by using a document term matrix, it will build a model. In this way, BoW represents the document as a bag of words only. Any information about the order or structure of words in the document is discarded.

The BoW algorithm builds a model by using the document term matrix. As the name suggests, the document term matrix is the matrix of various word counts that occur in the document. With the help of this matrix, the text document can be represented as a weighted combination of various words. By setting the threshold and choosing the words that are more meaningful, we can build a histogram of all the words in the documents that can be used as a feature vector. Following is an example to understand the concept of document term matrix:

Example

Suppose we have the following two sentences:

- Sentence 1: We are using the Bag of Words model.

- Sentence 2: Bag of Words model is used for extracting the features.

Now, by considering these two sentences, we have the following 13 distinct words:

- we

- are

- using

- the

- bag

- of

- words

- model

- is

- used

- for

- extracting

- features

Now, we need to build a histogram for each sentence by using the word count in each sentence:

Now, we need to build a histogram for each sentence by using the word count in each sentence:

- Sentence 1: [1,1,1,1,1,1,1,1,0,0,0,0,0]

- Sentence 2: [0,0,0,1,1,1,1,1,1,1,1,1,1]

Term Frequency(tf)

It is the measure of how frequently each word appears in a document. It can be obtained by dividing the count of each word by the total number of words in a given document.

Inverse Document Frequency(idf)

It is the measure of how unique a word is to this document in the given set of documents. For calculating idf and formulating a distinctive feature vector, we need to reduce the weights of commonly occurring words like the and weigh up the rare words.

Building a Bag of Words Model in NLTK

We will define a collection of strings by using CountVectorizer to create vectors from these sentences.

Let us import the necessary package:

Let us import the necessary package:

from sklearn.feature_extraction.text import CountVectorizer

Now define the set of sentences.

Sentences=['We are using the Bag of Word model', 'Bag of Word model is used for extracting the features.']

vectorizer_count = CountVectorizer()

features_text = vectorizer.fit_transform(Sentences).todense()

print(vectorizer.vocabulary_)

vectorizer_count = CountVectorizer()

features_text = vectorizer.fit_transform(Sentences).todense()

print(vectorizer.vocabulary_)

The above program generates the output as shown below. It shows that we have 13 distinct words in the above two sentences:

{'we': 11, 'are': 0, 'using': 10, 'the': 8, 'bag': 1, 'of': 7, 'word': 12, 'model': 6, 'is': 5, 'used': 9, 'for': 4, 'extracting': 2, 'features': 3}

These are the feature vectors (text to numeric form) which can be used for machine learning.

In the next post we'll see how to use this model in solving problems.