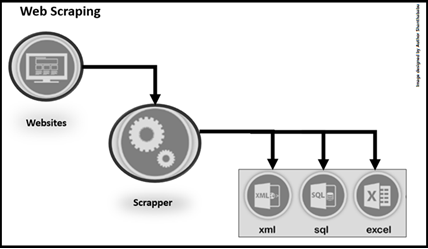

This is the process of extracting the diverse volume of data (content) in the standard format from a website in slice and dice as part of data collection in Data Analytics and Data Science perspective in the form of flat files (.csv,.json etc.,) or stored into the database. The scraped data will usually be in a spreadsheet or tabular format. It can be also called as Web-Data-Extraction, Web -Harvesting, Screen Scraping etc.

Is this Legally accepted?

As long as you use the data ethically, this is absolutely fine. Anyways we’re going to use the data which is already available in most of the public domain, but sometimes the websites are wished to prevent their data from web scraping then they can employ techniques like CAPTCHA forms and IP banning.

Crawler & Scraper

Let’s understand Crawler & Scraper:

What is Web Crawling?

In simple terms, Web Crawling is the set process of indexing expected business data on the target web page by using a well-defined program or automated script to align business rules. The main objective goal of a crawler is to learn what the target web pages are about and to retrieve information from one or more pages based on the needs. These programs (Python/R/Java) or automated scripts are called in terms of a Web Crawler, Spider, and usually called Crawler.

What is Web Scraper?

This is the most common technique when dealing with data preparation during data collection in Data Science projects, in which a well-defined program will extract valuable information from a target website in a human-readable output format, this would be in any language.

Scope of Crawling and Scraping

Data Crawling:

- It can be done at any scaling level

- It gives to downloading web page reference

- It requires a crawl agent

- Process of using bots to read and store

- Goes through every single page on the specified web page.

- Deduplication is an essential part

Data Scraping:

- Extracting data from multiple sources

- It focuses on a specific set of data from a web page

- It can be done at any scaling level

- It requires crawl and parser

- Deduplication is not an essential part.

0 comments:

Post a Comment