Convolutional neural networks are the same as ordinary neural networks because they are also made up of neurons that have learnable weights and biases. Ordinary neural networks ignore the structure of input data and all the data is converted into 1-D array before feeding it into the network. This process suits the regular data, however if the data contains images, the process may be cumbersome.

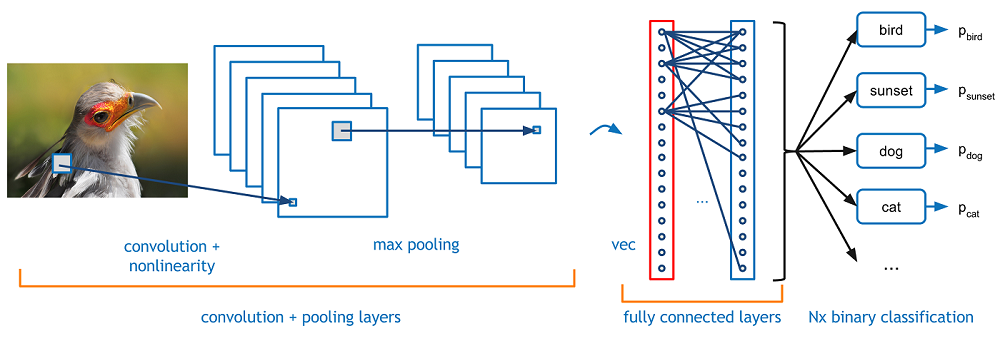

CNN solves this problem easily. It takes the 2D structure of the images into account when they process them, which allows them to extract the properties specific to images. In this way, the main goal of CNNs is to go from the raw image data in the input layer to the correct class in the output layer. The only difference between an ordinary NNs and CNNs is in the treatment of input data and in the type of layers.

Architecturally, the ordinary neural networks receive an input and transform it through a series of hidden layer. Every layer is connected to the other layer with the help of neurons. The main disadvantage of ordinary neural networks is that they do not scale well to full images.

The architecture of CNNs have neurons arranged in 3 dimensions called width, height and depth. Each neuron in the current layer is connected to a small patch of the output from the previous layer. It is similar to overlaying a 𝑵×𝑵 filter on the input image. It uses M filters to be sure about getting all the details. These M filters are feature extractors which extract features like edges, corners, etc.Layers used to construct CNNs

Following layers are used to construct CNNs:

- Input Layer: It takes the raw image data as it is.

- Convolutional Layer: This layer is the core building block of CNNs that does most of the computations. This layer computes the convolutions between the neurons and the various patches in the input.

- Rectified Linear Unit Layer: It applies an activation function to the output of the previous layer. It adds non-linearity to the network so that it can generalize well to any type of function.

- Pooling Layer: Pooling helps us to keep only the important parts as we progress in the network. Pooling layer operates independently on every depth slice of the input and resizes it spatially. It uses the MAX function.

- Fully Connected layer/Output layer: This layer computes the output scores in the last layer. The resulting output is of the size 𝟏×𝟏×𝑳 , where L is the number training dataset classes.

We can use Keras, which is an high level neural networks API, written in Python and capable of running on top of TensorFlow, CNTK or Theno. It is compatible with Python 2.7-3.6. You can learn more about it from https://keras.io/.

Use the following commands to install keras:

pip install keras

On conda environment, you can use the following command:

conda install –c conda-forge keras

In the next post we'll learn how to build a linear regressor using artificial neural networks.

0 comments:

Post a Comment